Hello camp friends, and thank you for taking a moment to think about evaluation during a time when evaluation seems less critical than the other big challenges you are likely facing right now. As we noted in previous blog posts, the evaluation systems you’ve built over time will survive, even if they are less of a priority for summer 2020.

We also know that shifts toward virtual or alternative programming do not necessarily mean shifts in your camp’s mission — your programs or activities might change, but the outcomes you want for your campers do not. Your logic model provides an excellent road map for what you might evaluate, even if you pivot to new program formats.

New program formats also mean new ways to think about keeping campers safe and ensuring a quality experience, both of which can be especially challenging in a virtual environment. Clearly, you have a lot to think about.

In this post, we offer some ideas for evaluating virtual programs using information you can gather from what you are already doing. In terms of the tree metaphor we offered in the first blog in this series, the information we share today is like the sun and the rain that your trees use to grow and thrive, even if you are not actively providing them.

As we’ve done before, we are organizing these ideas into two categories: process evaluation (program operations) and outcomes evaluation. Process (which we can also call program quality) and outcomes are not the same thing, but when you evaluate them together, you get a more complete picture of what your campers gained during their experience and what happened to foster those outcomes.

One caveat before we get started: try to avoid comparing your newly developed virtual programs with traditional, in-person programs you’ve run in the past. New programs — virtual or otherwise — require time to test, improve, and run smoothly, so it will likely take some time before your virtual programs are the well-oiled machine of your face-to-face programs. Instead, think of your virtual programs like you would any other new program: Would you compare a new backpacking program to your arts and crafts activities?

Ideas about evaluating process or program operations

- Reflect or debrief with your group as you close the virtual activity: What worked or didn’t work in the activity? Do people have suggestions for future activities? Depending on your online platform, you might invite people to respond to a poll, using a tool such as Poll Everywhere, to evaluate in real time.

- Measure satisfaction with a virtual program by using a Net Promoter Score, asking how likely people are to attend another virtual event, asking what they liked most and least, or asking for recommendations for the future.

- Ask staff about their experiences facilitating virtual programming, with questions such as “Did you feel connected to the camp community?” or questions about their personal or professional growth and their sense of making a meaningful contribution.

- Use Facebook “Insights” to measure “Reach” and “Engagement” (post clicks, comments, likes, and shares) for your Facebook Live events, posts, and videos.

- Use YouTube “Analytics” to measure “average view duration” and “views.” On both Facebook and YouTube, you can select different time frames for viewing results. See which videos and posts seem to generate more reach and engagement, and discuss possible reasons for the results with your team.

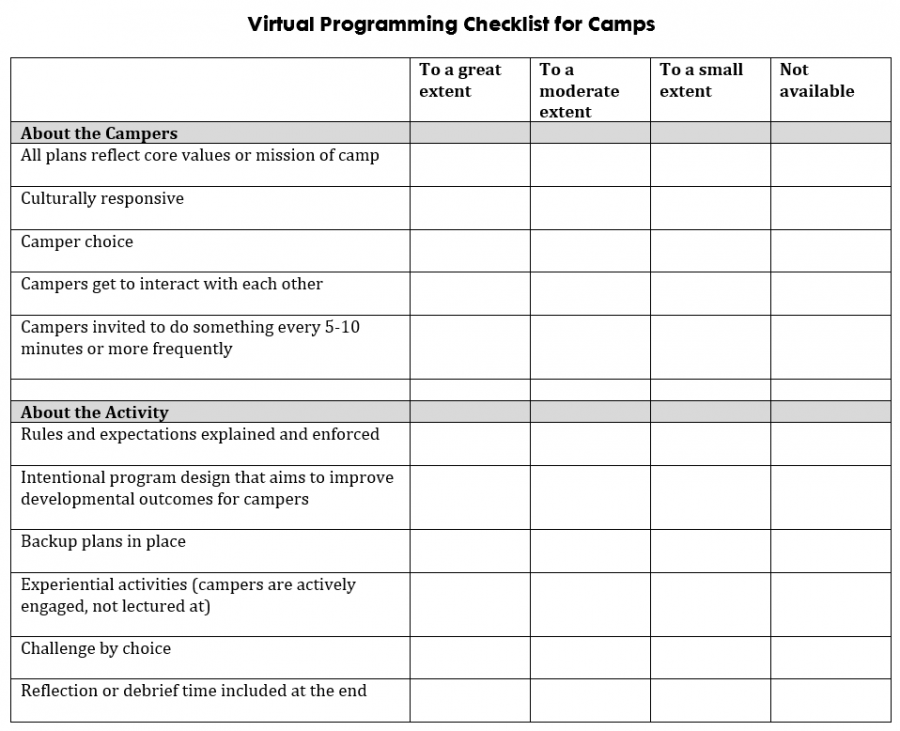

- Think about the quality of your virtual programs. For example, how did staff encourage campers to feel a sense of belonging? In what ways did this activity invite campers to make meaningful choices or to seek appropriate challenges? Here is an example of how one camp that is developing virtual programming is evaluating program quality:

Ideas about evaluating outcomes

- Use your reflection or debrief time to ask participants what they got out of their virtual program experience. This is a great way to explore outcomes when a program is new or has moved to a virtual format.

- Ask campers to draw or create a photo journal about what they gained in the virtual program, maybe even using an online drawing app, and then gather staff via an online conferencing platform to review and interpret what campers share.

- Email a survey to campers (or parents and caregivers!) to measure the outcomes you hope campers will gain. You can even use ACA’s Youth Outcomes Battery.

- Once a month (or more frequently?), conduct online interviews or focus groups with five to 10 “frequent flyers” of your virtual programs. Ask questions such as: What did you learn in this virtual program? What difference, if any, did this virtual program make in your life?

Remember that you can combine process and outcomes evaluations in one survey, interview, or focus group. For example, you might start by asking your campers or families what they liked best or least about a virtual activity (process/satisfaction). Then you can move on to asking what effect the virtual activity had on their knowledge, skills, attitudes, aspirations, or other short-term changes. Connect these potential effects to your camp’s values or goals for campers.

And finally, remember that, as with any evaluation we do with campers, it’s important to be transparent about what you are collecting and how you are using it. Also, remember to share what you learn as quickly as possible with staff and possibly with campers, parents/caregivers, or other members of your camp community. Don’t worry about how neat or complete your evaluation is; what matters is that you are learning from it and using it to continuously improve how you engage campers.

Laurie Browne, PhD, is the director of research at ACA. She specializes in ACA's Youth Outcomes Battery and supporting camps in their research and evaluation efforts. Prior to joining ACA, Laurie was an assistant professor in the Department of Recreation, Hospitality, and Parks Management at California State University-Chico. Laurie received her PhD from the University of Utah, where she studied youth development and research methods.

Ann Gillard, PhD, has worked in youth development and camps for more than 25 years as a staff member, volunteer, evaluator, and researcher.

Thanks to our research partner, Redwoods.

Additional thanks go to our research supporter, Chaco.